Offline-First AI Assistants: Faster AI Without Cloud Dependence

Offline-First AI Assistants are intelligent tools that do their core work without relying on the internet or cloud servers. Instead of sending your data to remote servers for processing, these assistants perform computations directly on your device, offering faster responses, stronger privacy, and uninterrupted performance. They represent the next evolution of smart assistants, designed to give users more control, speed, and security without constant connectivity.

What Are Offline-First AI Assistants?

Offline-First AI Assistants are digital assistants built with on-device intelligence. Traditional assistants such as Siri, Alexa, and Google Assistant have historically relied on cloud-based models to process voice commands and generate responses; offline-first systems run those computations locally.

That means when you ask your assistant to summarize a note, translate a sentence, or organize your tasks, it doesn’t need to send your data to a data center thousands of miles away; it processes the request right on your phone, laptop, or wearable device.

This “offline-first” design makes AI assistants not just faster and more private, but also more reliable in areas with poor internet connectivity.

Why the World Is Moving Toward Offline AI

The shift toward offline-first AI isn’t accidental—it’s a response to growing user demands for privacy, control, and speed. Cloud AI was revolutionary, but it also introduced several issues:

- Privacy Risks: Every cloud query means sending user data to remote servers.

- Latency: Even with fast networks, sending and receiving data adds delay.

- Dependence on the Internet: Without Wi-Fi or mobile data, cloud AI stops working.

- Energy Costs: Constant data transmission consumes more energy and bandwidth.

Offline-first models address these problems by running AI locally. With advances in edge computing and compact neural models, developers can now fit capable AI models onto small devices.
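A back-of-the-envelope calculation shows why compact models matter. The sketch below assumes an illustrative 7-billion-parameter model and counts only the memory needed to store its weights at different precisions (bits per weight); activations, the attention cache, and runtime overhead add more in practice, so treat the results as lower bounds. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    bytes_needed = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_needed / 1e9


# Hypothetical 7-billion-parameter model at common precisions.
for bits in (16, 8, 4):
    print(f"7B params at {bits:>2}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

At 16-bit precision the weights alone need about 14 GB, more RAM than most phones have; at 4 bits the same model needs about 3.5 GB, which is why reducing precision is central to running models on-device.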

How Offline-First AI Works

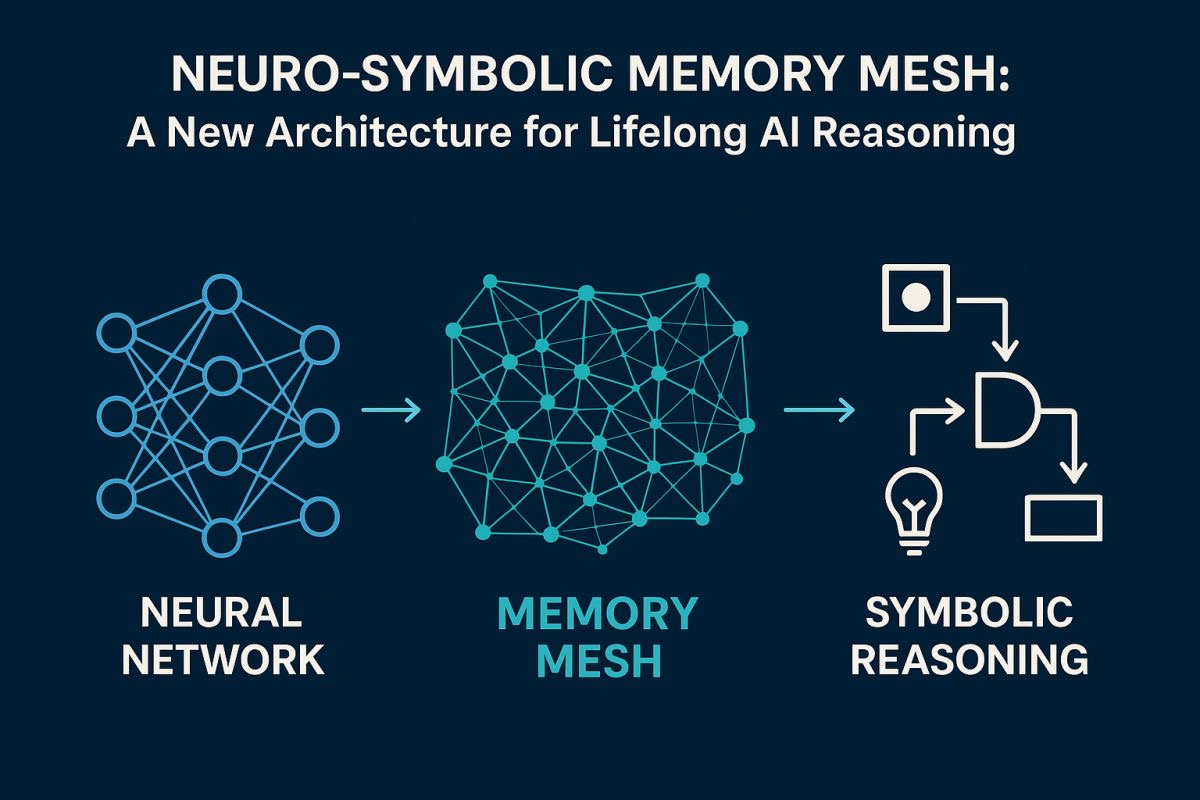

Offline-first AI relies on a blend of edge computing, neural compression, and optimized hardware. Here’s a simplified breakdown of the process:

- Model Compression: Large AI models are shrunk with techniques such as quantization, pruning, and distillation so they can run efficiently on devices with limited memory and power.

- On-Device Processing: Tasks like text generation, speech recognition, and translation happen within the device’s processor.

- Selective Cloud Syncing: When updates or larger tasks are needed, the system can occasionally connect to the cloud, but it’s not required for daily use.

This architecture enables low-latency responses and strong data privacy, since user information stays on the device unless the user explicitly authorizes sharing it.
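The local-first flow described here, where cloud access is optional and gated on the user's permission, can be sketched as a small dispatcher. This is a minimal illustration under stated assumptions, not how any particular product works: the class `OfflineFirstAssistant`, its handler dictionaries, the `cloud_consent` flag, and the `pending_sync` queue are all hypothetical names, and the toy "summarizer" simply returns the first sentence.

```python
from typing import Callable, Optional


class OfflineFirstAssistant:
    """Hypothetical local-first dispatcher; all names here are illustrative."""

    def __init__(
        self,
        local_handlers: dict[str, Callable[[str], str]],
        cloud_handler: Optional[Callable[[str, str], str]] = None,
        cloud_consent: bool = False,
        is_online: Callable[[], bool] = lambda: False,
    ) -> None:
        self.local_handlers = local_handlers
        self.cloud_handler = cloud_handler
        self.cloud_consent = cloud_consent  # the user must opt in explicitly
        self.is_online = is_online
        # Requests that need the cloud wait here, on the device, until approved.
        self.pending_sync: list[tuple[str, str]] = []

    def handle(self, task: str, text: str) -> str:
        # 1. Prefer on-device processing whenever a local handler exists.
        if task in self.local_handlers:
            return self.local_handlers[task](text)
        # 2. Use the cloud only with explicit consent and a live connection.
        if self.cloud_handler is not None and self.cloud_consent and self.is_online():
            return self.cloud_handler(task, text)
        # 3. Otherwise keep the request local and queue it for a later sync.
        self.pending_sync.append((task, text))
        return f"'{task}' needs the cloud; saved for later sync."


# Toy local "summarizer" that keeps only the first sentence.
assistant = OfflineFirstAssistant(
    local_handlers={"summarize": lambda text: text.split(".")[0] + "."},
)
print(assistant.handle("summarize", "Buy milk. Call Sam. Book flights."))
print(assistant.handle("plan_trip", "Lisbon in May"))
print(assistant.pending_sync)
```

The order of checks is the design choice worth noting: local handling always wins, and the cloud path requires both explicit consent and a live connection, so an assistant created with no extra configuration stays fully offline.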

Key Benefits of Offline-First AI Assistants

1. Speed and Low Latency

Without the round trip to cloud servers, responses carry no network delay. Simple tasks such as speech recognition or launching an app can complete almost instantly, while longer outputs like generated text depend on the device’s hardware and the size of the model.

2. Privacy and Data Control

Your voice, photos, and notes stay on your device. In a fully local setup, nothing is uploaded to, analyzed by, or stored on third-party servers, making these systems well suited to users concerned about surveillance or data misuse.

3. Offline Accessibility

Whether you’re on a plane, hiking, or in an area with weak connectivity, your AI still works seamlessly. This reliability is a massive leap for remote workers, travelers, and rural users.

4. Energy and Cost Efficiency

By reducing network calls, devices use less bandwidth and users avoid unnecessary data costs, although running models locally shifts some energy use onto the device itself. Companies can also cut spending on expensive cloud inference infrastructure.

5. Security Against Data Breaches

Since sensitive data stays on your device, it is not exposed to breaches of remote servers, though the device itself still needs to be secured. Offline-first AI builds security in by design.

Real-World Examples of Offline-First AI

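- Apple’s On-Device Siri and Apple Intelligence – Since iOS 15, Siri can process speech on-device and handle many requests, such as setting timers or launching apps, without a connection. Apple Intelligence, introduced in 2024, runs a local model for many tasks and hands larger ones to Apple’s Private Cloud Compute.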

- Rewind AI – A personal memory app that records your screen activity and stores and indexes it locally on your Mac, keeping that history private by default.

- Hybrid Assistants – Some emerging assistants offer hybrid functionality, using local reasoning for basic tasks and switching to online mode for complex questions.

- Open-Source Models on Laptops and Phones – Tools like LM Studio and Ollama (on desktops and laptops) and MLC LLM (which also targets iOS and Android) let users run open-weight language models such as Llama or Mistral directly on their own hardware.

These examples show that offline-first AI isn’t science fiction—it’s already being implemented in products consumers use daily.

Challenges in Building Offline-First AI

Despite its potential, offline-first AI isn’t without challenges:

- Hardware Limitations: Running advanced AI models requires significant processing power and memory.

- Model Size: Compressing models without losing accuracy is a tough balance.

- Battery Usage: Continuous on-device computation can increase battery drain.

- Limited Features: Some assistants may offer fewer capabilities offline compared to cloud-connected ones.

However, with the rise of neural accelerators and optimized chips (like Apple’s Neural Engine or Qualcomm’s Hexagon NPU) and more efficient models (like quantized transformers), these challenges are shrinking quickly.
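Quantization, the technique behind the quantized transformers mentioned above, stores weights in fewer bits. The sketch below shows symmetric per-tensor int8 quantization on random stand-in weights using only the standard library; the function names are hypothetical, and production toolchains typically quantize per channel or per group and often go down to 4 bits, so this illustrates the idea rather than any specific framework’s method.

```python
import random


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]  # stand-in weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

max_err = max(abs(w - r) for w, r in zip(weights, restored))
fp32_bytes = 4 * len(weights)  # if stored as float32: 4 bytes per weight
int8_bytes = 1 * len(q)        # 1 byte per int8 weight (plus one shared scale)
print(f"float32: {fp32_bytes} bytes, int8: {int8_bytes} bytes")
print(f"max error {max_err:.2e}, bounded by scale / 2 = {scale / 2:.2e}")
```

Storage drops by a factor of four, and because every weight is rounded to the nearest multiple of `scale`, each weight’s error is at most half a step. In real models, the accuracy question is whether those small per-weight errors add up across many layers.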

The Future of Offline-First AI Assistants

The next generation of AI assistants will be hybrid – combining offline and online capabilities intelligently.

Here’s what the near future looks like:

- Personalized AI Models: Devices will increasingly adapt or fine-tune models locally to match your behavior and preferences.

- Federated Learning: Devices will learn collaboratively without sharing raw data.

- Smarter Edge Devices: Phones, wearables, and even vehicles will host powerful AIs locally.

- Ethical AI Design: Offline-first systems promote transparency and ethical AI usage by keeping data under the user’s control rather than a third party’s.
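Federated learning is commonly built on federated averaging (FedAvg): each device trains on its own data and shares only its updated model parameters, which a coordinator averages, weighting each device by how many training samples it used. The sketch below assumes plain Python lists as model parameters and three hypothetical devices; real deployments add secure aggregation or differential privacy, because raw parameter updates can still leak information about the data behind them.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg-style aggregation: average parameters weighted by sample count."""
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("clients reported no training samples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for params, n in client_updates:
        if len(params) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, p in enumerate(params):
            averaged[i] += p * n / total  # larger local datasets count for more
    return averaged


# Three hypothetical devices: (locally trained parameters, number of local samples).
# Only these numbers leave each device; the raw training data never does.
updates = [([1.0, 2.0], 10), ([3.0, 0.0], 30), ([2.0, 2.0], 60)]
print(federated_average(updates))  # approximately [2.2, 1.4]
```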

In short, the offline-first revolution will make AI faster, more ethical, and more human-centered—giving users true ownership of their digital interactions.

Why Offline-First AI Matters for Users and Businesses

For users, it means privacy, independence, and trust. For businesses, it means lower server costs, compliance with data protection laws, and more reliable performance.

Offline-first systems can also open new markets—rural regions, developing countries, or industries where connectivity is limited (like defense, healthcare, or fieldwork).

The companies that embrace offline-first technology today will define the next era of AI innovation.

Final Thoughts

Offline-First AI Assistants mark a turning point in how we interact with technology. Instead of depending on distant servers, these systems bring intelligence closer – to your hands, your pocket, and your device.

They’re faster, safer, and more personal. As AI continues to evolve, the offline-first model won’t just be a feature—it will become the foundation of trustworthy, human-centered artificial intelligence.